Apple is developing new tools that will alert children, and notify their parents, when a child sends or receives sexually explicit photos through the Messages app.

The feature is one of several new technologies Apple is introducing to limit the spread of Child Sexual Abuse Material (CSAM) across the company's platforms and services.

Apple says these advancements will let it detect known CSAM images on its mobile devices, such as the iPhone and iPad, and in photos uploaded to iCloud, while still protecting user privacy.

The new Messages feature, meanwhile, is intended to help parents take a more active and informed role in guiding their children as they learn to communicate safely online.

After a software update later this year, Messages will use on-device machine learning to analyze image attachments and determine whether a shared photo is sexually explicit.

Because all of the processing happens on the device, Apple does not need to access or read the child's private communications.

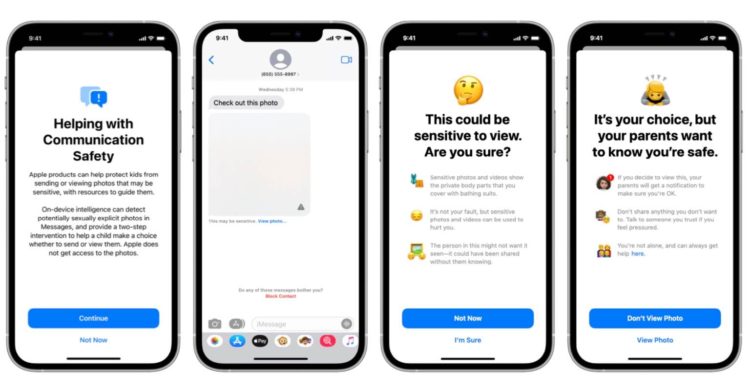

If a sensitive photo is detected in a message thread, the image will be blurred. A label reading "This may be sensitive" appears below it, along with a link the child can tap to view the photo. If the child chooses to view it, another screen with more information appears. There, a message warns the child that sensitive photos and videos "show the private body parts that you cover with bathing suits" and that "it's not your fault, but sensitive photos and videos can be used to harm you."

These warnings are intended to help the child make the safest choice: not viewing the content.

Features like these could help protect children from sexual predators, both by interrupting conversations and offering advice and resources, and by alerting parents that a predator may be involved.

Whether a child is sending or receiving explicit images, Apple's technology could help by identifying the material, intervening, and alerting users to what is being shared.

Apple says the new technology will arrive in a software update later this year for accounts set up as families in iCloud on iOS 15, iPadOS 15, and macOS Monterey in the United States.

Read more on Tech Gist Africa:

WhatsApp adds a new feature to make photos and videos disappear

Amid backlash, Facebook says it will continue to develop Instagram for children under the age of 13